pBM project Beautiful Mind 2024

Form Design Assist AI Program

Architectural Intelligence / Artificial Intelligence

- INDUCTION DESIGN (1990)

- SUBWAY STATION / IIDABASHI (2000)

- ALGOrithmic Design (2001 - )

- WEB FRAME-Ⅱ (2011)

- ALGODEX (2012)

- ALGODeQ (international programming competition) (2013/14)

- AItect (2022)

- pBM project Beautiful Mind 2024

- SUBWAY STATION / IIDABASHI - ventilation tower (2000)

- KeiRiki program series (2003 - )

- ShinMinamata MON (2005)

- AItect (2022)

Objective

The purpose of pBM is to support the designer in the act of design.

Support here does not mean efficiency or labor saving but improving the quality of the design. This support is limited specifically to the "form" of the design object.

This "improvement of quality" is achieved by presenting, without direct instructions from the designer, forms that the designer latently desires but cannot find on his or her own.

The aim of the pBM project is to develop a computer program, and its UI, that can fulfill this purpose.

(Part of the research and development of pBM was funded by CREST, Japan Science and Technology Agency (JST).)

Background

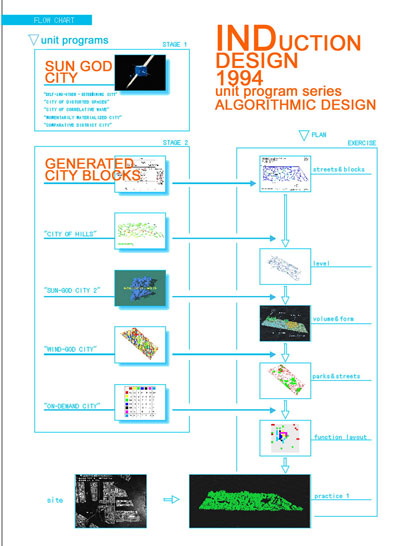

The development of pBM can be traced back to "INDUCTION CITIES" (1994).

In the "INDUCTION CITIES" project, a program was written for each of the various planning functions required of cities and buildings, with the goal of producing a city or building that satisfies the requirements through the combination of these programs.

Later, part of this functionality was put into practice in the "WEB FRAME" of the "SUBWAY STATION / IIDABASHI" (2000, AIJ Award, etc.).

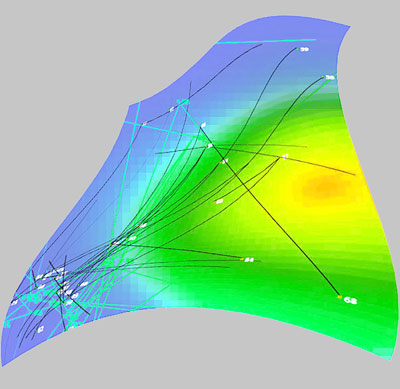

Furthermore, we developed a "program of Flow" (supported by IPA's MITOU program for next-generation IT innovation leaders) that uses neural network AI to generate forms interactively, and implemented it in the "TX Kashiwanoha-Campus Station" (2004).

In addition, we developed the "KeiRiki" series, programs that integrate structural mechanics and generative programming (in collaboration with Makoto Ohsaki, Kyoto University), and implemented them in the "ShinMinamata MON" (2005).

pBM is positioned as a further development within this series of research and development.

Subject

Ref. → AItect-pBM part

- Why specialize in form?

- → Because it seems to be the most difficult task for AI.

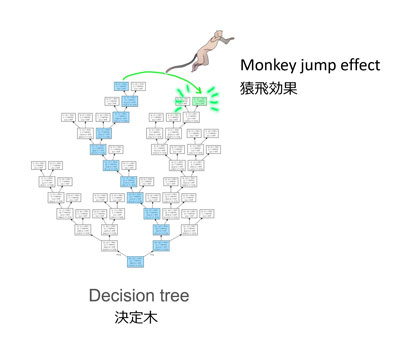

- Why is it difficult? The "Monkey Jump effect"

- → Human decision making sometimes takes a leap.

- Why not use NN-based generative AI?

- → To achieve the goal without a large amount of training data.

- Why use existing CG applications?

- → Because the main focus of pBM is versatility.

Methods - Development

pBM is not based on a neural network (NN).

It uses an original AI engine developed by our collaborator, Prof. Issei Sato of the University of Tokyo.

This AI uses Gaussian processes, a Bayesian regression model.

Unlike typical generative AI built on NN-based LLMs, pBM does not require prior training on large amounts of data.

The user begins a dialogue with an AI in "nothingness," so to speak, rather than with an AI pre-trained on large datasets.
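Gaussian process regression itself can be sketched in a few lines. The block below is a generic, textbook zero-mean GP with a squared-exponential (RBF) kernel in plain Python; it is not Prof. Sato's engine, whose details this article does not describe. The function names, the kernel choice, and the idea of fitting scalar "preference scores" to parameter vectors are illustrative assumptions (pBM works from the user's selections, so scores are a simplification). It does show one property relevant here: with no observations at all, the model simply returns its prior, which is one way to read "starting from nothingness."

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def rbf_kernel(a: Vector, b: Vector, length_scale: float = 1.0, variance: float = 1.0) -> float:
    """Squared-exponential covariance: nearby parameter vectors are strongly correlated."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return variance * math.exp(-0.5 * sq_dist / length_scale ** 2)


def cholesky(m: List[List[float]]) -> List[List[float]]:
    """Lower-triangular L with L L^T = m, for a symmetric positive-definite m."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(m[i][i] - s)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return L


def solve_lower(L: List[List[float]], b: Sequence[float]) -> List[float]:
    """Forward substitution: solve L x = b."""
    x: List[float] = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def solve_lower_transposed(L: List[List[float]], b: Sequence[float]) -> List[float]:
    """Back substitution: solve L^T x = b using the lower-triangular L."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(X: Sequence[Vector], y: Sequence[float], x_star: Vector,
                 noise: float = 1e-6, **kernel_args: float) -> Tuple[float, float]:
    """Posterior mean and variance of a zero-mean GP at x_star, given observations (X, y)."""
    n = len(X)
    K = [[rbf_kernel(X[i], X[j], **kernel_args) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = solve_lower_transposed(L, solve_lower(L, y))  # alpha = (K + noise*I)^-1 y
    k_star = [rbf_kernel(x, x_star, **kernel_args) for x in X]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)  # v.v = k_star^T (K + noise*I)^-1 k_star
    var = rbf_kernel(x_star, x_star, **kernel_args) - sum(vi * vi for vi in v)
    return mean, max(var, 0.0)  # clip tiny negative values caused by rounding
```

For example, `gp_posterior([], [], [0.3, 0.4])` returns a mean of 0 and a variance of 1.0 (the prior), while querying at an already-scored point returns roughly that point's score with near-zero variance.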

This article does not explain the theory behind this AI; instead, it describes pBM, the design support program that uses it.

To adapt this engine to the purpose of pBM, the joint development repeated a cycle: creating a pBM program that includes the AI, trying out pBM, identifying issues, deciding the direction of program revisions, revising the program, and trying it again.

Some may think that the development process is just a matter of linking the AI program and the CG application, but it is not that simple.

Even a single action, "returning an estimated value based on the user's operation," does not have a single "returned value."

The returned values vary greatly depending on how several parameters of the AI program are set.

As this "operation-response" process is repeated, the value (i.e., the solution) can drift in any direction.

In addition to choosing the parameters that change the returned value, it is necessary to set up alternatives for the result and branches of the history, and to adjust the value limiter.

Furthermore, the AI program itself must be improved so that it does not fall into loops or converge unexpectedly.

This means running many trials with the program, checking the values, adjusting them to values considered appropriate, and running the trials again.

In addition, some phenomena appear only after many trials, and it is necessary to investigate the causes and formulate countermeasures.

Since each member has other full-time work, this development cannot be done "in one fell swoop," but rather intermittently.

Each member worked on his or her own part; to integrate the work, the team initially met once a month (once a week during peak periods), but after the pandemic, all work moved online.

pBM, partially started around 2010 and fully under way from 2015, was largely completed in 2023. (Part of the development period overlaps with the CREST period.)

In other words, it took eight years of research and development.

(As mentioned above, there were also many interruptions during busy periods.)

Compared to the recent development speed of generative AI, where new features are released every few days, this development seems to be on a different time scale.

Composition - Actuation

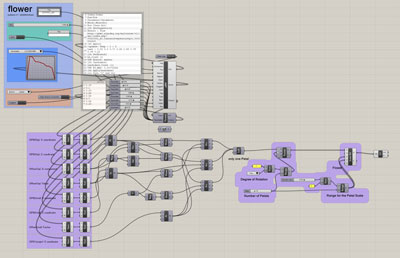

pBM consists of three parts.

These are the CG engine, the AI engine, and the user interface (hereinafter UI), which contains the programs that integrate the two.

For the CG engine, we chose Rhinoceros with Grasshopper (GH), which can be customized with scripts and is widely used in architectural design and education. The AI is an original engine, as described above.

The game engine Unity was used for the integration interface.

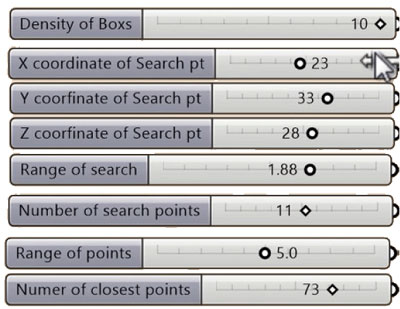

In conventional parametric design, the desired form is found by adjusting the sliders of several parameters one by one.

Until the middle stage of development, the UI was somewhat complicated because it was customized from the GH interface; the Unity version has a clean, simple UI.

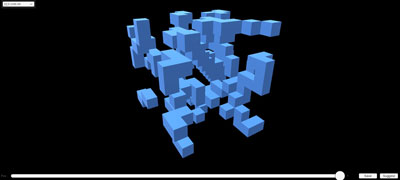

The user first assembles the components that generate the desired form in GH.

However, as the number of parameters increases, the interactions between parameters become more complex, and it is not easy to reach the desired form just by manipulating the sliders of individual parameters.

But in pBM, the user operates only one integrated slider, regardless of the number of original parameters.

The AI does not present the form itself.

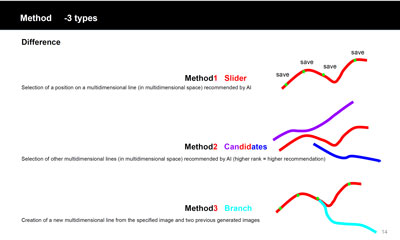

What it presents is not a form but a slider: a straight line in the multidimensional parameter space, whose number of dimensions equals the number of parameters (the line may correspond to a curve in 3-dimensional space).

The AI "takes into account" (figuratively speaking) the user's weighted selection history and presents the single multidimensional line it assesses as most appropriate.
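As a rough illustration of how one slider can drive many parameters, the sketch below maps a slider position `t` in [0, 1] to a full parameter vector by linear interpolation between the line's two ends. `SliderLine` and its fields are hypothetical names, and clamping each value to its original GH slider range is only one guess at what a "value limiter" might do; where pBM actually places the line is decided by its AI engine.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SliderLine:
    """Hypothetical: a straight line in N-dimensional parameter space, exposed as one slider."""
    start: List[float]                # parameter vector at the left end (t = 0)
    end: List[float]                  # parameter vector at the right end (t = 1)
    bounds: List[Tuple[float, float]]  # (min, max) of each original GH parameter

    def params_at(self, t: float) -> List[float]:
        """Map one slider position t to values for all N original parameters."""
        t = min(max(t, 0.0), 1.0)  # keep the slider itself within [0, 1]
        values = []
        for s, e, (lo, hi) in zip(self.start, self.end, self.bounds):
            v = s + t * (e - s)                 # linear interpolation along the line
            values.append(min(max(v, lo), hi))  # assumed value limiter: clamp to the GH range
        return values
```

Because of the clamping, the path stays a straight line only while every value is inside its range; at a boundary it bends, which is one practical reason a limiter has to be tuned.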

Operation

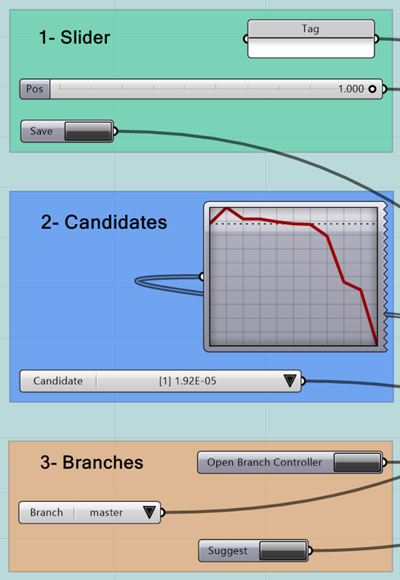

Top: Selection on parameters

Middle: Selection of multiple candidates

Bottom: Selection of branching (Right: non-Unity version)

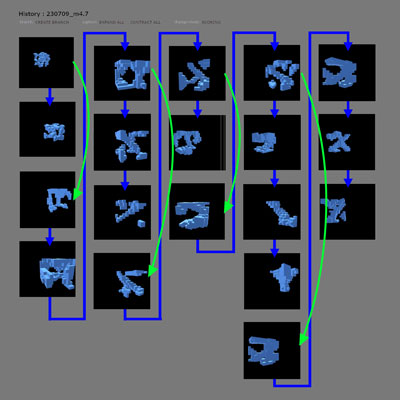

Blue line: original history

Green line: branch route

A public version of pBM is planned, but as of 2024 it is not yet available.

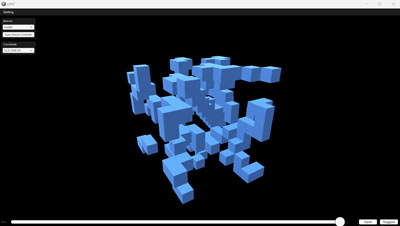

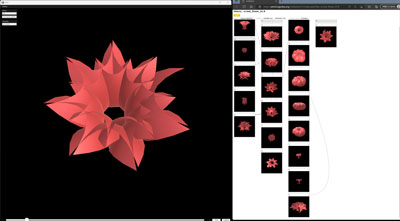

The executable version consists of two screens.

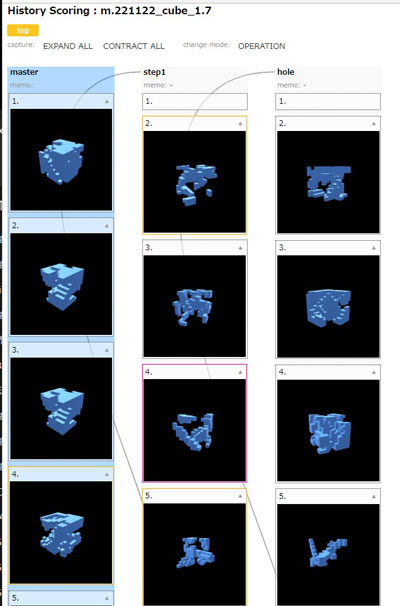

One is the "main page" for form display/operation, and the other is the "branch control page" for history display/selection.

On the branch control page, users can choose to display images or lists.

The forms to be operated on are created in GH.

Each GH definition must be processed in advance so that it works with pBM. The first form displayed on the main screen is the one the AI chooses by default.

The user moves the slider left or right to bring it closer to the desired form. As mentioned above, there is only one slider, so operation is simple.

When a form that is relatively close to the user's preference is displayed, the user clicks on the "save" button. The AI will then take into account the form the user has selected and display the next candidate slider.

The user then repeats this process of selection and presentation, and the system is expected to eventually arrive at a form that is close to what the user is looking for.

Simple and easy.
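The select-and-save loop described above can be simulated to show its structure. In this sketch the AI is replaced by a deliberately naive stand-in that proposes a line from the last saved form in a random direction, and the user is replaced by a hidden preference function; `propose_line`, `simulated_user_pick`, and `run_session` are hypothetical names, and treating the last saved form as the slider's left end is an assumption. Under that assumption the left end is always among the positions the user can pick, so the saved forms never get worse.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def propose_line(best: Vector, rng: random.Random, step: float = 1.0) -> Tuple[Vector, Vector]:
    """Hypothetical stand-in for the AI: a line from the last saved form in a random direction.

    pBM's engine chooses the line from the weighted selection history; a random
    direction is used here only to keep the example self-contained.
    """
    direction = [rng.uniform(-1.0, 1.0) for _ in best]
    end = [b + step * d for b, d in zip(best, direction)]
    return list(best), end


def simulated_user_pick(start: Vector, end: Vector,
                        preference: Callable[[Vector], float], ticks: int = 20) -> Vector:
    """Hypothetical stand-in for the user: scan the slider and 'save' the best-looking position."""
    positions = [[s + (i / ticks) * (e - s) for s, e in zip(start, end)]
                 for i in range(ticks + 1)]
    return max(positions, key=preference)


def run_session(initial: Vector, preference: Callable[[Vector], float],
                rounds: int = 30, seed: int = 0) -> List[Vector]:
    """Repeat presentation -> selection; returns every saved form (as parameter vectors)."""
    rng = random.Random(seed)
    saved = [list(initial)]
    for _ in range(rounds):
        start, end = propose_line(saved[-1], rng)
        saved.append(simulated_user_pick(start, end, preference))
    return saved
```

With a hidden target such as `[0.7, -0.3, 0.5]` and a preference equal to the negative squared distance to it, `run_session([0.0, 0.0, 0.0], preference)` returns 31 saved parameter vectors whose preference never decreases. A real engine would replace the random direction with one chosen from the weighted history.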

Note that what the AI shows here is not a "form."

As noted above, what the AI shows is not a single form, but a single parameter curve (a straight line in multidimensional space).

In the multidimensional space spanned by the GH parameters, the AI weights the several points chosen by the user and, accordingly, presents the most "desirable" straight line through parameter space (a curve in perceivable space).

What is displayed on the main screen is the form with the highest fidelity to the instructions on that straight line/curve.
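The text does not say how the AI weights the user's chosen points. Purely as a hypothetical example, the sketch below gives newer selections exponentially more weight and combines them into a weighted centroid that could serve as an anchor for the next line; `recency_weights`, `weighted_anchor`, and the `decay` parameter are illustrative assumptions, not pBM's scheme.

```python
from typing import List, Sequence


def recency_weights(n: int, decay: float = 0.7) -> List[float]:
    """Normalized weights for n saved selections, oldest first; newer ones weigh more."""
    if n <= 0:
        raise ValueError("need at least one selection")
    raw = [decay ** (n - 1 - i) for i in range(n)]  # oldest: decay**(n-1), newest: 1.0
    total = sum(raw)
    return [w / total for w in raw]


def weighted_anchor(selections: Sequence[Sequence[float]], decay: float = 0.7) -> List[float]:
    """Weighted centroid of the saved parameter vectors (hypothetical anchor for the next line)."""
    weights = recency_weights(len(selections), decay)
    dim = len(selections[0])
    return [sum(w * p[d] for w, p in zip(weights, selections)) for d in range(dim)]
```

With `decay=0.5`, three selections receive weights of 1/7, 2/7 and 4/7 from oldest to newest.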

There are three ways in which the user can build up a series of exchanges with the AI: (1) choosing a position on the slider, (2) choosing a lower-ranked candidate, and (3) branching from the history. (Operations 2 and 3 are used together with operation 1.)

The parameter slider on the main screen has the "exploitation edge" at its left end and the "exploration edge" at its right end. The left end is the most faithful to the user's instructions; the further to the right, the greater the leap away from them. On this straight line, the user chooses the balance of "faithfulness-leap" with respect to his or her own instructions.

The reason for this approach is the recognition that users do not always know what they want.

Users (subconsciously) have a contradictory desire: to have things go in the direction they "think they want" and, at the same time, to encounter something unexpected that turns out to be unexpectedly good (the author's rule of thumb).

If pBM cannot also accommodate this latent wish to have expectations betrayed, it cannot fulfill its purpose. However, if it betrays expectations every time, it will be abandoned.

Therefore, we left the degree of "fidelity/leap" itself to the user's choice.
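One common way to give a line an "exploitation" end and an "exploration" end, borrowed from Bayesian optimization rather than taken from pBM, is to put the left end at the candidate with the highest predicted preference and the right end at the candidate with the highest upper confidence bound (`mean + beta * std`). The sketch assumes `mean` and `std` are supplied by some model, such as a Gaussian process; `slider_ends` and `beta` are hypothetical names.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def slider_ends(candidates: Sequence[Vector],
                mean: Callable[[Vector], float],
                std: Callable[[Vector], float],
                beta: float = 3.0) -> Tuple[Vector, Vector]:
    """Pick the two ends of the slider from candidate parameter vectors.

    Left (exploitation) end: highest predicted preference.
    Right (exploration) end: highest upper confidence bound, mean + beta * std,
    which favors candidates the model is still uncertain about.
    """
    exploit = max(candidates, key=mean)
    explore = max(candidates, key=lambda x: mean(x) + beta * std(x))
    return list(exploit), list(explore)
```

With `beta=0` both ends coincide at the best-predicted candidate; larger values of `beta` push the right end toward forms the model is less sure about, in line with the idea that moving further right means a greater leap.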

In this simple iteration, the user has another option.

That option is to choose the AI's second-best (or lower-ranked) candidate.

Based on the displayed order of recommendation, the user can freely choose any candidate other than the one the AI recommends most.
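Choosing a lower-ranked candidate amounts to sorting the candidates by the AI's score and indexing into that order. A minimal sketch, in which `ranked`, `choose`, and the scores are all hypothetical:

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def ranked(candidates: Sequence[T], score: Callable[[T], float]) -> List[T]:
    """Candidates in the AI's order of recommendation (best first)."""
    return sorted(candidates, key=score, reverse=True)


def choose(candidates: Sequence[T], score: Callable[[T], float], rank: int = 1) -> T:
    """Return the candidate at the given rank: 1 = top recommendation, 2 = second-best, ..."""
    order = ranked(candidates, score)
    if not 1 <= rank <= len(order):
        raise ValueError(f"rank must be between 1 and {len(order)}, got {rank}")
    return order[rank - 1]
```

For scores `{"A": 0.2, "B": 0.9, "C": 0.5}`, rank 1 returns `"B"` and rank 2 returns `"C"`.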

On the other screen, the Branch Control page, the selected forms are displayed in order.

If, while repeating the "selection → presentation" process, the user feels that an earlier form was better, he or she can go back to it in the form history and branch off from there.

This branches off from the middle of the trunk of the earlier "selection → presentation" process and extends a new branch that differs from the previous one.

The program allows more detailed settings and selections beyond these three operations, but the running version of pBM is limited to these three.
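The branching history can be modeled as a tree in which every saved form remembers the form it continued from; going back and branching is then just saving a new child under an earlier node. The sketch below is a hypothetical data structure (`HistoryNode`, `save`, `path`), not pBM's internal representation.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class HistoryNode:
    """One saved form (its parameter vector) in the selection history tree."""
    params: List[float]
    parent: Optional["HistoryNode"] = field(default=None, repr=False)
    children: List["HistoryNode"] = field(default_factory=list, repr=False)

    def save(self, params: List[float]) -> "HistoryNode":
        """Record a new selection that continues from this node, and return it."""
        child = HistoryNode(params, parent=self)
        self.children.append(child)
        return child

    def path(self) -> List[List[float]]:
        """The route from the first selection to this node."""
        route = []
        node = self
        while node is not None:
            route.append(node.params)
            node = node.parent
        return route[::-1]
```

In the terms of the history diagram, the first route of saves plays the role of the blue line (original history), and saving a new child under an earlier node starts a green line (branch route).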

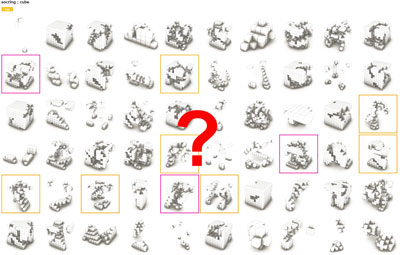

Trial Example

pBM Trial Short movie (Accelerated version)

→ cube

→ petal

→ vortex

Beyond these examples, any form that can be produced with Rhino/GH should be usable with pBM.

Implementation

pBM has been implemented on a trial basis at Tamkang University in Taiwan, where the author previously served as a professor.

A workshop (MMpBMWS = MachinaXMind pBM Workshop) was held for 15 graduate students of the Department of Architecture at Tamkang University, where Professor Chen, one of the research collaborators, was teaching at the time.

Evaluation - by the Students

In their comments after the workshop, many students stated that the pBM they tried presented them with the form they wanted (i.e., that it was useful).

→ Students' Responses

(Original in Chinese, unedited; English translation by Prof. Chen; Japanese translation by AI)

There is no way to know for sure whether these evaluations are "really so" or merely an "illusion that it seems so." If the person thinks so, we can only assume that pBM was useful.

After all, only the person himself or herself can tell whether a form is good or bad.

If the goal were to obtain a proposal that satisfies performance requirements such as light, wind, and efficient layout, as in "INDUCTION DESIGN," numerical evaluation would be possible, and the program's excellence could be assessed objectively.

However, what pBM seeks to obtain is goodness for the individual, not goodness for everyone.

The goodness of the form presented in pBM is a relative value attributed to the individual, for which averages and statistics are irrelevant. Therefore, pBM is valid as long as the individual finds it useful.

Others cannot intervene in this process.

In this sense (as far as their opinions after this workshop are concerned), it may be fair to say that the effectiveness of pBM has been proven.

Since pBM_AI has demonstrated an effect that contrasts with LLM-based AI, this can be called an accomplishment.

After all, pBM_AI, an ultra-compact program that requires no training data and very little power, can do what LLM-based AI, with its huge resource requirements (data and power, i.e., funds), cannot yet do.

The most important point of evaluation, however, is whether it is usable. The author's own evaluation, as a researcher/developer and as a user (an architect), differs somewhat from the students'. pBM does not always reach the expected performance. This may be due to the "Sarutobi (Monkey Jump) effect" or "capricious action," or it may be due to the characteristics of the AI used here.

Although I am both a developer and a user (an architect), my evaluation is inevitably stricter because it is made through the eyes of an architect: my hopes are high, and I am aware of the technical problems.

That is the author's evaluation, but as pBM is a tool for individuals, the evaluation should be left to each user to judge for themselves.

Only for You...

One more thing.

It is often pointed out to me that if we collected the selection processes of a large number of subjects, as in this WS or on the Internet, and used them as data, something like a universal aesthetic sense could be extracted, and we could present forms that many people "think are good/prefer."

Certainly, that might be effective for choosing which label would be best for a new mineral water.

However, this collective-knowledge method may not be effective for presenting a form that has originality and breaks with the general trend, which is what pBM targets (also described in another section).

Just as "average faces" are not very attractive (as noted in another section), market principles and voting do not (I think) produce good designs. pBM aims to present "a form that is good for one specific user" rather than "a form that everyone seems to choose."

pBM is a tool "for the individual" = "for you".

And - 30 years of advance notice

As mentioned above, pBM aimed to be a tool that anticipates the user's latent intentions and shows them right in front of the user, asking, "Is this what you are looking for?"

In 2015, when pBM was launched in earnest, such programs were unknown.

(It is unconfirmed whether they ever existed.)

And in the last 9 years, things have moved in that direction.

As is well known, with the advent of so-called generative AI built on LLMs (large language models) and NNs (neural networks), this trend quickly became mainstream.

As of 2024, generative AI cannot do what pBM can do; or rather, it cannot do it yet.

"Not yet" = "eventually" ≒ "soon." LLM-based AI is truly a tour de force.

It relies on a learning process that requires a large number of GPUs and a huge amount of electric power (i.e., financial power) to learn from an enormous mass of training data.

(That said, a compact AI technique that surpasses LoRA will be developed in the near future.)

If "intentional, anticipatory generation of 3D forms" and "mutual conversion between 3D forms and commands" become possible, generative AI will be able to achieve what pBM does.

However, in the domain of "images," where no functional conditions are imposed, even current AI, which works on a "keep drawing lots and you will eventually win" basis, can produce "something reasonable."

Ref. → Drawings page

Works with a larger series number (i.e., more recent works) include collaborations between AI and a human being (the author).

In addition to form, the processing of requirements other than form (including function) will be added step by step (although it will not be easy), leading to AI that presents designs satisfying the requirements of "form + function + structure + construction + economy."

This is very close to what "THE INDUCTION CITIES / INDUCTION DESIGN" aimed for 30 years ago, in 1994.

Furthermore…

When that happens, the performance of AI will go beyond "design support." At that point, it is already "design itself."

The role of pBM and "INDUCTION DESIGN" was to "support" designers.

However, if one says "Create ~~" and something "close" to one's desire appears automatically, without individual instructions and with all necessary conditions satisfied, then this is "the act of design" itself.

Will "designers" be needed there?

Will there still be a role for "humans" in this world?

Already, humans are no match for AI in Chess and Shogi.

It is as if humans are allowed to play only on the palm of the AI's hand. Such a situation is not limited to the game domain.

Drug discovery, which involves identifying, from a vast number of candidates, molecular structures that have the desired function, and the discovery of new scientific theories from known physical phenomena and existing papers are areas in which AI is already surpassing humans.

In the "design" domain, there is a wide range of conditions to be solved, and their interactions are highly intertwined. (We have already experienced that with "the INDUCTION DESIGN")

It will not be "that easy" for AI, but there is no reason to say "no way."

Goldman Sachs reportedly lists architectural design as the third most likely job to be replaced by AI (2024).

During a chat with my collaborator, then Associate Professor Sato, I once asked him, "What will you do when AI is able to create AI programs?" He replied, "If that happens, I will stop doing research."

(This is my recollection, so there may be some misinterpretation.)

This was still science fiction at the time, but now it is about to become a reality.

The question can also be put as: "What would you (as an architect) do when a (superior) AI design comes along?"

What is required

In the design of general equipment, it is OK if the required conditions are met. However, in architectural design, it is not enough to "solve the problem," is it?

This is because presenting a solution that satisfies the required conditions is a necessary condition for design, but not a sufficient condition.

If there are rooms with the necessary area and the ability to withstand earthquakes, that alone makes a "building," but it cannot be called "architecture."

Architecture requires more than the fulfillment of conditions: it must respond to people's feelings.

Architecture that makes you want to go there just by looking at it.

And a space that "makes you feel excited or at ease" when you enter it.

It is something that moves people's hearts beyond the dimension of solutions: "beautiful," "wonderful," "inspiring." This is what the author called a dream, assuming that machines cannot dream...

As of 2024, I had Gemini, Bing, and Firefly draw about 50 "dreaming machines" with different prompts, and even the best one was only this good.

All of the AIs drifted toward this kind of standard image that we have seen somewhere before.

Even though all of them are highly skilled in drawing.

It seems that current AI has not yet developed "imagination/creativity."

AI cannot step outside the framework of the training data it has learned.

It seems that the training data from which AI learned is at the same time a "wall" that confines it.

(That was one of the reasons pBM used an AI that does not require training on supervised data.)

It will not be long, though, before a technology that can overcome this wall appears on the scene...

Things that move our hearts

People are "moved" by works of art, music, and architecture.

At the same time, we are also moved by natural scenery.

Our hearts vibrate when we see a mountain peak dyed deep red by the setting sun, when we look up at a breaking wave, or when we see the wings of a dragonfly, with their exquisite Voronoi pattern, shining in the light.

These emotional vibrations resonate with those we feel on seeing a pyramid, Bernini's Santa Teresa, or Hokusai's The Great Wave, which are not nature but the work of human hands.

If humans are moved by "natural phenomena, which should be nothing more than the composite action of physical events", it is no wonder that humans are moved by "the products produced by AI, which is a composite of arithmetic operations".

That moment, when you or I are moved by a form that AI shows us, is when Kikai (the machine) will have obtained a "dream," AI's insurmountable obstacle.

Really? - The Dreaming Machine

Here is another routine Q&A that I have mentioned several times in other publications.

In the past, when I was advocating "INDUCTION DESIGN" and "Algorithmic Design," I was often asked the standard question: "Will we no longer need designers?"

To this question, I always answered as follows:

"It is the privilege of human beings to dream.

Machines = programs help us apply that dream to reality.

But machines do not dream.

Machines can 'solve' requirements = conditions, but they cannot 'solve' dreams = imagination, which are objects without conditions.

This is because an image is not a 'condition that is there' but a 'vision that is nowhere to be found.'"

In my book ALGODEX (ALGOrithmic Design EXecution and logic, 2012), I also wrote:

"What is the purpose of the creation?

What does good mean?

It is up to the human being to transform this 'what' into something tangible.

This is the area where the unknown (latent) abilities that only humans can possess are (should be) activated in the 'design = generation' process."

And in the chapter "AD + AItect: When machines have dreams, what will humans see?", the author answered:

Kronos / Saturnus' dream

I like to draw with my hands.

The scene that is created by my own hands, freed from the constraints of thought, is a “fresh” surprise to me as well.

But...

Now, I would respond:

"When machines start to dream, the role of human beings may come to an end, unless human beings activate a new ability (yet to be seen) that goes beyond even 'dreams.'"

(There are people who can learn an unfamiliar language in a few days or visualize the mathematical structure of a subject; some such cases are called acquired savant syndrome or giftedness.)

Will humans really be able to develop such "super" abilities, ones that AI cannot catch up with?

History has proven that humans are capable of creating unruly beings.

This nature is probably written into the basic human program (human OS).

Fire, agriculture, electricity, nuclear power... Humans cannot resist the temptation to obtain the "power" to extend their own hands and alter the environment around them.

The god of agriculture, Kronos = Saturnus, was destined to be overthrown by what he had created once he could no longer control it.

The grace period left to humankind is not long.

Postscript:

The conception of pBM dates back to around 2000.

There was an unsolvable problem in "Guided Cities" / "Algorithmic Design."

It was a task for which evaluation criteria could not be written down, such as "obtaining a preferred form."

Thinking that only AI could solve such a problem, I started a "flow program" using NN and genetic algorithms together with Mr. Chiba, a systems engineer (SE) who had collaborated with me on the Guided City project.

The development of this AI program was supported by IPA's MITOU program for next-generation IT innovation leaders, and the program was used in the implementation design of the "TX Kashiwanoha Campus Station" in 2004.

The station was completed, but the program's capabilities were not satisfactory.

At that time, LLMs were not yet known, and GPUs were used mainly in gaming.

Feeling the limitations of NNs at that time, we set the work aside for a while. Later, I decided to try again, conceived of pBM using an NN, and began working on it together with Mr. Chiba.

When I hosted the international symposium AQS "Can AI make designs?" in 2015, Mr. Sato, a young and brilliant AI researcher who attended the symposium, approached me, which led to a new team structure for pBM.

The team decided to proceed using the new AI developed by Mr. Sato instead of the conventional NN.

Mr. Zheng, who is skilled in JavaScript and other programming languages, joined the team, and Mr. Chen, who teaches IT in architectural education in Taiwan, joined remotely.

Mr. Chiba left the team midway through the project due to his busy schedule as president of two more companies, but pBM was subsequently carried out with the support of CREST by JST.

pBM is the fruit of the efforts and abilities of these "like-minded" individuals.

I would like to express my deep appreciation for the close cooperation and tremendous efforts of the team members, who shared the spirit of "making today's impossibilities tomorrow's possibilities."